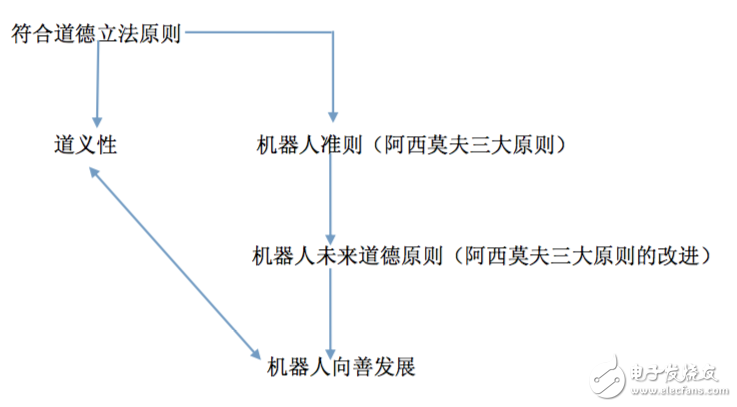

The "black technology" inside the "little bee" killer robot

Imagine a quiet college classroom where students are studying at their desks. Suddenly, a swarm of small flying machines, buzzing like bees, pours in through the windows and doorways. They hover over the classroom and seem to be scanning and confirming targets. Then, after a dull thud, a scream rings out: a boy has fallen in a pool of blood. In just 0.5 seconds, one "little bee" has discharged the 3 grams of explosives it carried into the boy's head, killing him on the spot. In an instant the classroom becomes a slaughterhouse, filled with screams and fear.

This bloody scene comes from a video released by Professor Stuart Russell of the University of California, Berkeley. Although the killing in the video is not real, we must be clear that the technology shown in it already exists.

The "killer robot ban" movement and the UN Convention on Certain Conventional Weapons

Recently, the Korea Advanced Institute of Science and Technology (KAIST) opened an artificial intelligence R&D center to develop AI technologies for combat command, target tracking, and unmanned underwater vehicles. This has caused widespread concern among industry experts: artificial intelligence and robotics experts from 30 countries and regions have announced that they will boycott KAIST over its research on AI weapons, but the university has not promised to stop research and development.

As early as 2017, researchers at the Centre for the Study of Existential Risk at Cambridge University listed 10 threats that could lead to the end of the world and human extinction. Artificial intelligence and killer robots ranked near the top.

Military killer robots have been around for a long time

In 2013, Christof Heyns, a special rapporteur reporting to the UN Human Rights Council, issued a report calling on countries to freeze the testing, production, and use of "killer robots".
He argued: "Robots have no human feelings. They cannot understand or recognize other people's emotions. Because there is no adequate system of legal accountability, and because robots should not have the power to decide human life and death, deploying such weapons systems may be unacceptable."

If a military killer robot is defined as "an automatic machine that can carry out military operations on the battlefield without human intervention, or with only remote control," then automated gun turrets, minesweeping robots, and military drones all qualify. Moreover, such machines have been active on the battlefield far longer than people think, and robot "killing" started long ago.

During the Second World War, the Germans developed an anti-tank weapon called the Goliath remote-controlled bomb. This was an unmanned "pocket tank" operated by wired remote control (or wireless remote control if necessary) and packed with high explosives that could destroy a heavy tank by blasting through its belly armor.

(Image: the Goliath remote-controlled bomb)

With the emergence and continuous improvement of integrated circuit technology, computers kept shrinking. By the 1980s, they were small enough to be carried in military unmanned vehicles. At the same time, their capabilities kept improving. A computer could handle a series of tasks such as steering the vehicle, finding targets, telling friend from foe, and firing weapons, and it was stable and reliable enough to cope with a complex and changing battlefield environment.

The well-known Predator drone is essentially a military killer robot. In addition, some countries are already developing more highly automated combat robots that can independently search for and select targets on the battlefield and then decide whether to fire.

(Image: MQ-9 Reaper drone)

Humanity or ethics? That is the question

The film "Terminator" shows us a dark future in which robots kill and enslave human beings. Although the scenes in the movie are unlikely to become reality in the short term, Ke Ming, an analyst at Intelligent Relativism, believes that the research and use of military killer robots is not only a matter of human nature but also an ethical issue.

1. Is it a combatant or a weapon?

Traditional warfare takes place mainly between states and political groups, and its principal actors are armies and soldiers. With the development and deployment of killer robots, soldiers are no longer the only fighters, and the question of who wages war becomes more complicated. Traditional artillery, tanks, and warships cannot be operated without human hands, so soldiers remain the mainstay of war. A killer robot, by contrast, has artificial intelligence: it can think and judge, it can automatically find, identify, and strike targets, and it can autonomously complete the tasks people give it, so it can take the place of soldiers in the charge.

If killer robots go onto the battlefield, they are combatants in the full sense. Yet a killer robot is a combatant with the capacity to act but no capacity to bear responsibility. It differs from a traditional soldier and it differs from a weapon. Whether a killer robot is a combatant or a weapon will be difficult to define, and its ethical status is difficult to determine.

2. How can safety be ensured?

A killer robot has no feelings, cannot understand or recognize other people's emotions, and cannot tell whether the person it is about to kill is friend or foe. If autonomous killer robots become tools of war, it is hard to guarantee that they will not kill innocent people, especially when something causes them to malfunction and run out of control. No one can guarantee that they will not attack their human comrades, harming the intelligent species that created them and whose bodies are weaker than theirs.
In fact, there is currently no effective way to keep automated systems from making errors. Once a program goes wrong or is maliciously tampered with, bloodshed is likely, and human security will face a grave threat. If the development of intelligent robots capable of killing is left unrestricted, the day will come when "automatic" killer robots go to the battlefield and decide human life and death.

3. How can friend be told from foe?

From the era of cold weapons to the present, casualties caused by failing to tell friend from foe have been an unavoidable problem on the battlefield. Modern warfare often uses technical means to avoid such accidents, but if military robots are to be fully autonomous, more problems must be solved.

Human soldiers can judge not only whether a moving person is an enemy, a friendly, or a civilian, but can also read the other party's intentions fairly accurately. Military robots, at best, can distinguish friend from foe, and they often struggle to judge what the other party is "thinking". They are therefore quite likely to shoot surrendering soldiers and prisoners of war, an act prohibited by international conventions.

4. Should it be given the ability to "kill"?

Whether to give autonomous robots the "ability to kill" has long been a focus of debate among experts and scholars in artificial intelligence, the military, and other fields. In 1940, the famous science fiction writer Isaac Asimov proposed the "Three Laws of Robotics". He stated: "Robots must not harm humans; they must obey human instructions, unless doing so conflicts with the first law; they must protect themselves, unless doing so conflicts with the first two laws."

The relationship between robot development and morality

In "Second Variety", Philip K. Dick described military robots infiltrating human society. After a US-Soviet nuclear war, the United States, to keep the country from being occupied by Soviet forces, created military robots that could assemble themselves, learn, and slit people's throats. But these robots kept evolving. They assembled new robots that looked like Soviet soldiers, both men and women, and like malnourished children. They not only attacked humans but also killed each other, and they even slipped into the secret US base on the moon, sowing the seeds of humanity's destruction.

Of course, if a single military robot suffers a program error, or is jammed by the enemy or infected by a computer virus, it could indeed attack its human comrades. However, worrying that robots will gain self-awareness, betray humanity, and manufacture their own kind is still premature.

Is it Ali Baba's cave or Pandora's box?

Researchers at Yale University have listed military robots as one of the most promising applications of artificial intelligence. The appeal of military robots is hard to resist. They reduce the risk to a country's own soldiers and lower the cost of war. They save on soldiers' pay, housing, pensions, and medical expenses. They are faster and more accurate than humans and need no rest. They do not suffer the combat stress disorders that affect only human combatants. They are emotionally stable and need no mobilization. On a single command, they can go where humans cannot and carry out all kinds of tasks.

However, if a killer robot kills innocent people, who should be held responsible? Noel Sharkey, a British professor of robotics, believes that "it is obviously not the robot's fault. A robot's computer may malfunction and the robot may start to go berserk. We cannot decide who should be held responsible for that. Under the laws of war, determining who is responsible is very important."
From a humanitarian point of view, whatever the original intention behind developing killer robots, robot warfare should follow the utilitarian principle: when killer robots are used, the desired effect should be maximized and collateral damage minimized.

From a utilitarian perspective, killer robots lack the human instinct of fear. However high or low their intelligence, they will act resolutely and will not shrink from danger. A killer robot needs no crew and no life-support equipment. If it is destroyed, no one dies; it is simply replaced with a new one, and costs can be greatly reduced.

Using killer robots in place of soldiers for reconnaissance, mine clearance, and combat missions also helps free soldiers from some extremely dangerous areas, reduces battlefield casualties, and lowers the cost and burden of military activity. However, a killer robot can automatically find and attack targets on the battlefield just as a human soldier can, so a robot that runs out of control could bring great disaster to mankind.

In addition, if countries around the world launch an arms race in killer robots, military spending will rise sharply. On the whole, therefore, the utilitarian benefits of using killer robots are extremely limited, while the threat to human beings is great, and the disadvantages far outweigh the benefits. In any case, it is hard to see how developing and using killer robots would advance human interests.

It is true that the development of military killer robots will lead to great changes in the military field. Ke Ming, the analyst at Intelligent Relativism, believes that military robots are simply artificial intelligence applied to the military domain. Whether they turn out to be Ali Baba's cave or Pandora's box is something humanity itself must determine. Only by applying technology properly and putting it in the service of human development can we truly build the world's "Ali Baba's cave".